Revisiting the validity of different hiring tools: New insights into what works best

Science series materials are brought to you by TestGorilla’s Assessment Team: A group of IO psychology, data science, psychometric experts, and assessment development specialists with a deep understanding of the science behind skills-based hiring.

Employers are in the prediction business: They have to predict whether a candidate will be successful in a job based on limited information about their capabilities. To improve the accuracy of these predictions, it helps to prioritize hiring tools (e.g., pre-employment tests and assessments) with good criterion-related validity in order to get the greatest return on your investment.

In this blog post, we discuss recent advances in the assessment field that have reshaped our understanding of effective hiring practices. We give you an overview of recent scientific findings regarding the most predictive hiring tools and provide practical advice on how to use them in your hiring process.

Table of contents

- What is criterion-related validity?

- What did we know about the criterion-related validity of different hiring tools until recently?

- What does the latest science say?

- Why are the results of these two studies so different?

- How can TestGorilla help you apply best practices based on the latest research?

- Where to next?

What is criterion-related validity?

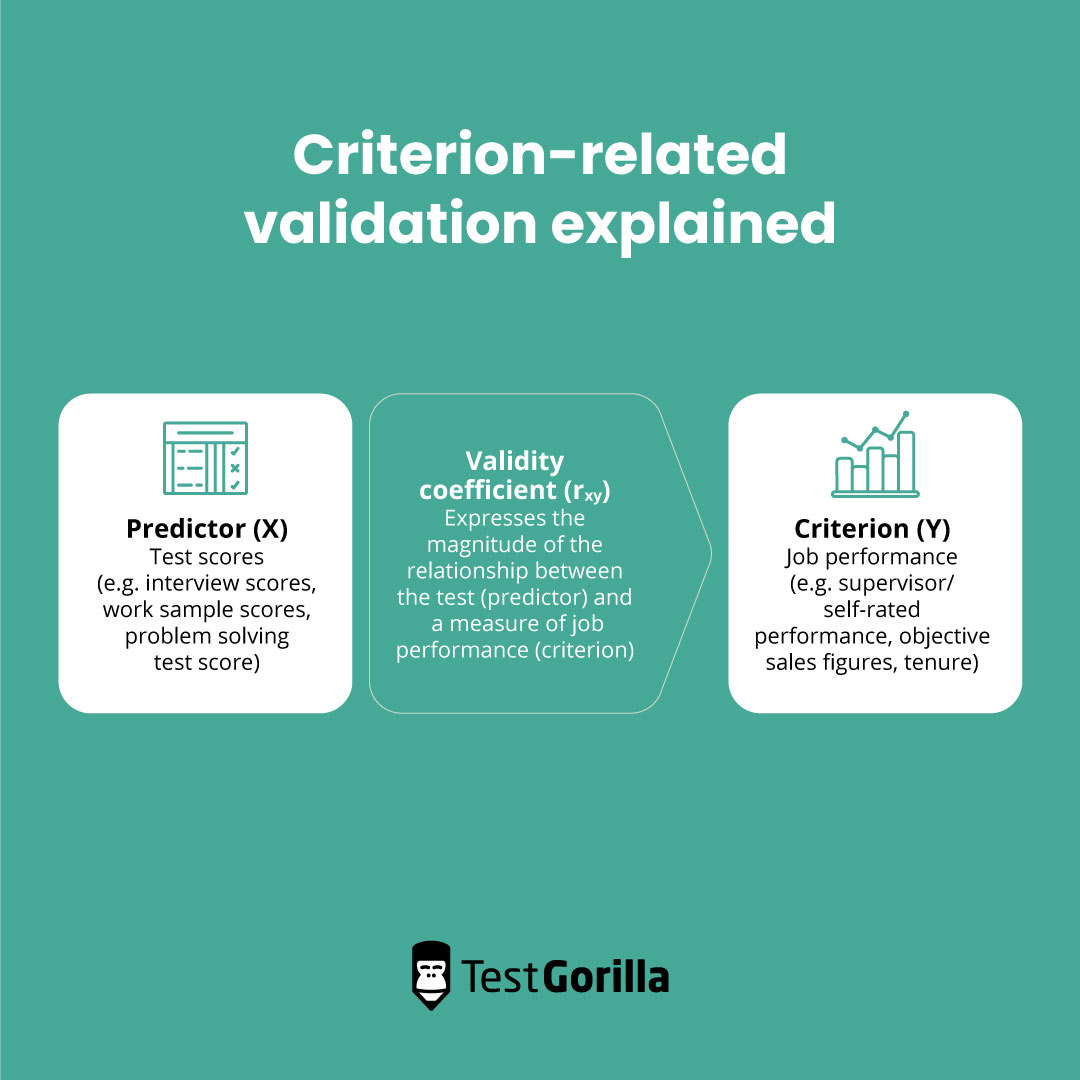

While there are various types of validity (see this blog for a more general introduction to validity), criterion-related validity often takes the spotlight in practical applications. Criterion-related validity refers to the extent to which we can make accurate predictions about candidates' expected job performance based on the scores obtained from different hiring tools. In this case, hiring tools refer to pre-employment tests and assessments such as cognitive ability tests, work samples, interviews, reference checks, and personality assessments.

Criterion-related validity is typically evaluated by examining the relationship between scores obtained in a hiring process (called predictors) and measures of actual job performance (called the criterion). It is frequently expressed as a validity coefficient. A validity coefficient is a correlation coefficient that quantifies the strength of the relationship between test scores and job performance. Although correlations can technically range from -1 to 1, validity coefficients for hiring tools are typically reported on a scale from 0 to 1. Higher values indicate a stronger relationship between scores on pre-employment tests and measures of job performance. For example, after conducting a validation study, Company X may find that candidates scoring higher on the Sales Management test perform better in a sales manager role (e.g., with an observed validity coefficient of .30).

Fig. 1: Criterion-related validation explained
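To make the idea of a validity coefficient concrete, here is a minimal sketch of how one could be computed as a Pearson correlation. The test scores and performance ratings are made-up illustration data, and the `pearson_r` helper is a hypothetical name rather than part of any TestGorilla tool.

```python
import math


def pearson_r(x, y):
    """Return the Pearson correlation between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations from the means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each variable
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical data: pre-hire test scores (predictor) and later
# supervisor performance ratings (criterion) for eight hires.
test_scores = [55, 62, 70, 48, 81, 66, 74, 59]
performance = [3.1, 3.0, 3.8, 2.9, 3.6, 3.9, 3.2, 3.4]

validity = pearson_r(test_scores, performance)
print(f"Observed validity coefficient: {validity:.2f}")
```

A real validation study would use far more than eight hires, since correlations from small samples are very unstable.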

What did we know about the criterion-related validity of different hiring tools until recently?

Practitioners and academics have been studying the effectiveness of different hiring tools for decades. Different studies have reached different conclusions about the same hiring method: some found it to be highly predictive, some found it to be just okay, and others found its criterion-related validity to be negligible.

When this happens, meta-analytic studies can offer a more accurate and credible conclusion about a specific topic or question. A meta-analysis is like a super-study that gathers all individual studies about a specific topic and analyzes them collectively. Looking at all the results together is like asking the opinion of an entire room of experts instead of just one. This way, you get a more rounded view of what the science says.
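As a rough illustration of how a meta-analysis pools results, the sketch below computes a sample-size-weighted mean validity coefficient, so that larger studies count for more than smaller ones. The study sizes and coefficients are invented for the example, and `weighted_mean_validity` is a hypothetical helper. Published meta-analyses also apply further corrections for statistical artifacts, which this bare-bones step leaves out.

```python
def weighted_mean_validity(studies):
    """Average validity coefficients, weighting each study by its sample size.

    `studies` is a list of (sample_size, validity_coefficient) pairs.
    """
    total_n = sum(n for n, _ in studies)
    return sum(n * r for n, r in studies) / total_n


# Hypothetical individual studies of the same hiring tool:
# (number of employees in the study, observed validity coefficient)
studies = [(120, 0.35), (45, 0.10), (300, 0.28), (80, 0.02)]

mean_r = weighted_mean_validity(studies)
print(f"Sample-size-weighted mean validity: {mean_r:.2f}")
```

The individual results here range from negligible to fairly strong, which is the situation the pooled estimate is meant to summarize.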

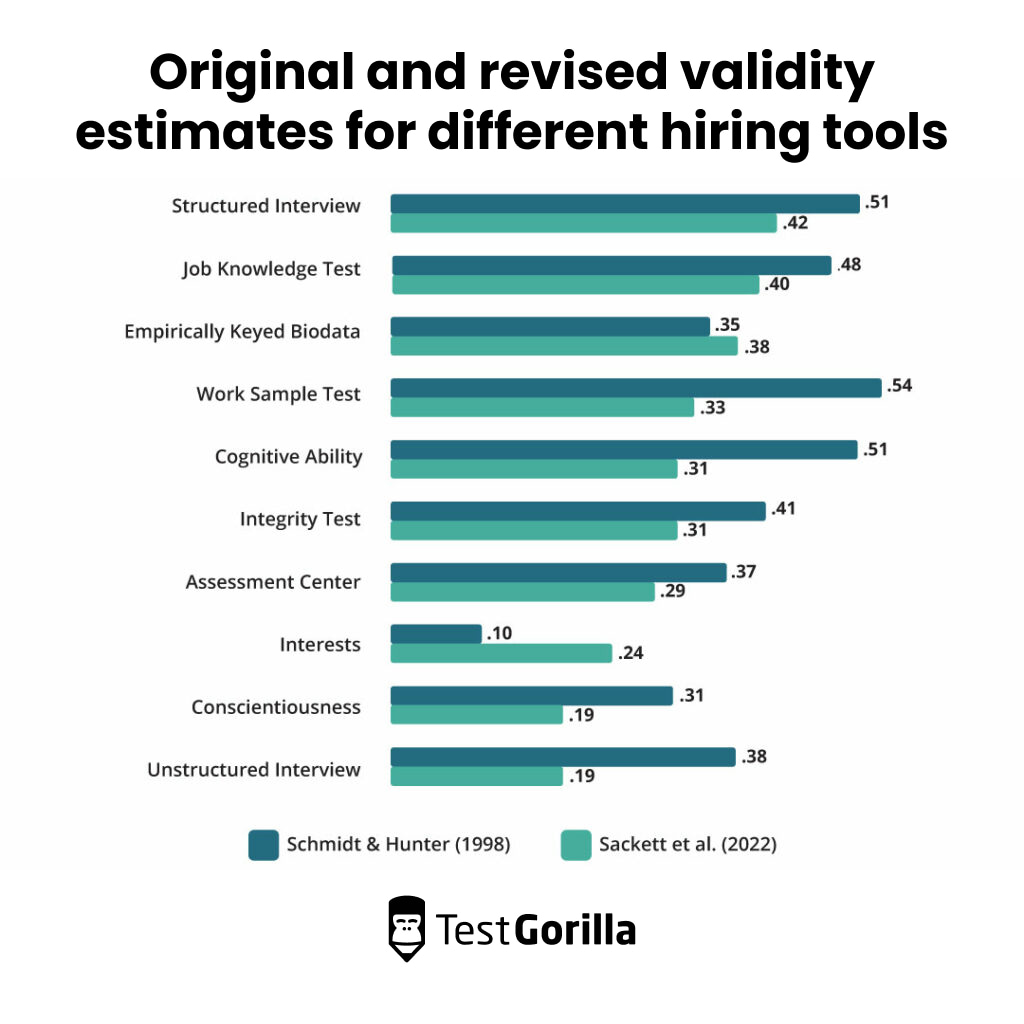

For a long time, one of the most important papers on the criterion-related validity of different hiring tools has been the meta-analysis conducted by Schmidt and Hunter (1998). They combined several studies and calculated the mean (i.e., average) validity coefficients for different hiring tools in relation to overall job performance. Their research provided some pretty ground-breaking insights into the effectiveness of different hiring tools. More specifically, they concluded that work sample tests, cognitive ability tests, and structured interviews are the best predictors of overall job performance. The mean validity coefficients reported by Schmidt and Hunter (1998) can be found in Figure 2.

Although many new studies and meta-analyses on the topic have been published since the Schmidt and Hunter paper in 1998, they were usually narrower in scope (e.g., focusing on specific types of situational judgment tests, personality tests, or interviews) and did not involve such a comprehensive comparison of different hiring tools. As such, decades of research and hiring practices have been built on the findings of the Schmidt and Hunter (1998) meta-analysis.

What does the latest science say?

A new meta-analysis by Sackett and colleagues (2022) challenged the findings of Schmidt and Hunter (1998). Sackett and colleagues found that, on average, validity coefficients are lower than in the original meta-analysis, and that the rank order of some of the most successful hiring procedures has changed: Structured interviews were found to be the most predictive of overall job performance, followed by job knowledge tests and empirically keyed biodata. A comparison of the validity coefficients found by Schmidt and Hunter (1998) and Sackett and colleagues (2022) is provided in Figure 2.

Figure 2. Comparison of mean validity coefficients of different hiring tools from Schmidt & Hunter (1998) and Sackett et al. (2022).

Why are the results of these two studies so different?

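The two studies’ results differ mainly because Sackett and colleagues (2022) used different statistical corrections, based on a better understanding of the nature of the original studies examined. In particular, as the title of their paper indicates, they argue that earlier estimates systematically overcorrected for restriction of range. In addition, new studies conducted since Schmidt and Hunter (1998) provided Sackett and colleagues (2022) with new and updated data.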

Lastly, the world of work has substantially changed since 1998. Digitalization has resulted in more knowledge-based white-collar jobs in comparison to 30 or 50 years ago. Jobs today require different competencies and skills than manufacturing jobs did. They are also evaluated differently. For example, while the job performance of an assembly line worker is pretty straightforward to measure by counting the number of assembled parts, evaluating the effectiveness of a UI/UX designer or an AI ethicist is a more complex task.

Schmidt and Hunter’s (1998) findings were the foundation on which we built our assessment practices for many years. But as the world and our understanding of it are ever-evolving, these foundations are subject to change and should be updated as new evidence emerges.
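The title of the Sackett et al. (2022) paper listed in the Sources refers to overcorrection for restriction of range. To show why the size of such a correction matters, here is a minimal sketch of a standard textbook correction for direct range restriction (often called Thorndike's Case II). This post does not state which exact formulas either meta-analysis used, so treat this as an illustration of the general idea only. The function name and the input values are hypothetical.

```python
import math


def correct_for_range_restriction(r_observed, sd_ratio):
    """Textbook correction for direct range restriction (Thorndike Case II).

    r_observed: validity coefficient observed among hired employees.
    sd_ratio:   predictor standard deviation among all applicants divided
                by the standard deviation among those hired. A value of
                1.0 means no restriction, so no correction is applied.
    """
    numerator = sd_ratio * r_observed
    denominator = math.sqrt(1 + r_observed**2 * (sd_ratio**2 - 1))
    return numerator / denominator


# Hypothetical observed validity of .30, corrected under two different
# assumptions about how much the hired group was restricted.
for sd_ratio in (1.0, 1.2, 1.5):
    corrected = correct_for_range_restriction(0.30, sd_ratio)
    print(f"SD ratio {sd_ratio:.1f}: corrected validity = {corrected:.2f}")
```

The larger the assumed restriction, the larger the corrected coefficient, so assuming too much restriction inflates the estimate. This fits the pattern of lower estimates reported in the newer meta-analysis.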

How can TestGorilla help you apply best practices based on the latest research?

The latest meta-analytic findings offer several key practical takeaways that you can implement to improve your hiring process:

1. Combine multiple measures in the selection process

While each selection procedure offers some validity, they work best in combination.

TestGorilla recommends a holistic, multi-measure approach to hiring. While the above validities apply to single hiring tools in isolation, research shows that when multiple measures are combined, the validity coefficient can increase substantially. For example, Sackett et al. (2022) showed that the validity coefficient can reach up to .47 on average based on the combination of hiring tools they considered.

Both academic and practical advice is always to combine more than one hiring method in the selection process to achieve more valid results, and to use pre-employment assessments together with other information obtained during the hiring process. With TestGorilla, this is as easy as adding multiple tests to the assessment and using those results to target interview questions.
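To illustrate why combining measures helps, the sketch below applies the standard formula for the multiple correlation of two predictors with one criterion. All input values are hypothetical and are not taken from Sackett et al. (2022). `combined_validity` is an illustrative helper name.

```python
import math


def combined_validity(r1, r2, r12):
    """Multiple correlation of two predictors with job performance.

    r1, r2: validity coefficients of each predictor on its own.
    r12:    correlation between the two predictors (must not be 1 or -1).
    """
    r_squared = (r1**2 + r2**2 - 2 * r1 * r2 * r12) / (1 - r12**2)
    return math.sqrt(r_squared)


# Hypothetical example: a skills test (validity .30) and a structured
# interview (validity .25) whose scores correlate .20 with each other.
combined = combined_validity(0.30, 0.25, 0.20)
print(f"Combined validity: {combined:.2f}")
```

The gain is largest when the two tools overlap little with each other (low `r12`), because each then contributes information the other does not.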

2. Use job-relevant hiring tools

The hiring process benefits the most from job-relevant measures. The more relevant the content of the hiring method to the role you’re actually hiring for, the higher the likelihood of a strong relationship with future job performance. This means that the pre-employment tests and assessments you use should be grounded in a strong understanding of the tasks and skills needed in the role you’re hiring for (see our guide to job tasks analysis).

TestGorilla offers a diverse library of tests with clear descriptions that help you choose the most relevant tests for your roles. Additionally, we offer assessment templates to get you started with job-relevant tests even when you’re unfamiliar with the position you’re hiring for.

3. Use structured interviews

A well-prepared structured interview is one of the best ways to evaluate your candidates. Structured interviews should be carefully developed based on job-analytic information and conducted by skilled interviewers. However, they do take a lot of time to prepare properly and are not easily scalable. By using TestGorilla assessments and combining them with structured interviews, you can increase the validity of your hiring process.

More specifically, structured interviews can be particularly effective when complemented by targeted questions aimed at further probing the insights derived from the assessment of candidates’ skills and personality.

4. Use job knowledge and skills tests

Job knowledge and skills tests, like those TestGorilla offers in our test library, are the best option when speed and volume are important. In our library, you can find job knowledge and skills tests assessing role-specific skills, software skills, programming skills, language skills, typing skills, and more. Unlike structured interviews, they are fast to administer and score, easily scalable, and can be deployed with little preparation in advance. Because they provide objective information on job knowledge and skills, they are also easy to combine with other hiring tools, which leads to an overall boost in the validity of the hiring process.

5. Use cognitive ability tests judiciously

While cognitive ability has been praised for decades as one of the most important predictors of job success, new findings suggest it plays a more modest role in predicting overall job performance.

When including cognitive ability in an assessment, it is important to have a good understanding of the cognitive requirements of a role and whether the assessment of other skills, abilities, or preferences may deliver a higher return on investment and less adverse impact. Nevertheless, assessing cognitive ability might still be the best option when hiring for positions where applicants may not have prior experience and/or training in job-specific skills that would otherwise be easily assessed with job knowledge and work sample tests.

Moreover, cognitive ability has long been found to be a very strong predictor of performance in learning settings (e.g., higher education, training at work, knowledge acquisition on the job). Cognitive ability tests can thus be helpful to gauge the learning capability of a candidate and allow you to hire people with high potential for growth in their roles.

6. When using personality and preferences measures, match them to the role and context

Personality, preferences, and interests all have modest validity coefficients, but the validity of these tests substantially increases when contextualized measures (as opposed to overall measures) are used. Contextualized means that measures have been adapted to work best in the selection context and their content often makes specific reference to the work setting.

TestGorilla’s current personality tests (i.e., the Big Five, Enneagram, 16 types, and DISC personality tests) are overall measures of personality. These tests can be used to gain a deeper understanding of test-takers' personality inclinations, and can help enrich learning and development conversations.

In contrast, our Motivation, Culture add, and upcoming behavioral competency tests are contextualized measures, which take the requirements of your organization and/or the specific role into consideration. These tests can offer you valuable insights into a candidate's workplace behavior and help you better understand your candidates before meeting them in an interview.

An added bonus is that our internal research also shows that including a personality test or a Motivation test in your assessments is a key way to increase candidates’ satisfaction with their assessment experience.

Where to next?

In the ever-evolving realm of science, change is the only constant. The Sackett et al. (2022) study exemplifies this principle by revolutionizing our understanding of validity in selection procedures and providing practical guidelines on how to develop a hiring process that yields the best results.

Armed with this new knowledge, recruiters and HR professionals can navigate the complex landscape of hiring with greater precision and confidence. Remember, in the quest for identifying top talent, a multifaceted and science-based approach holds the key to unlocking hiring excellence.

The Assessment Team constantly works to stay abreast of the latest developments surrounding the science of hiring, and as the conversation around this topic continues to evolve, we’ll be standing by to offer you new insights. So, stay tuned.

Sources

Borneman, M.J. (2010). Criterion validity. In N.J. Salkind, B. Frey, & D. M. Dougherty (Eds.) Encyclopedia of research design (pp. 291-296). Thousand Oaks, CA: Sage Publications, Inc. doi: 10.4135/9781412961288

Sackett, P. R., Zhang, C., Berry, C. M., & Lievens, F. (2022). Revisiting meta-analytic estimates of validity in personnel selection: Addressing systematic overcorrection for restriction of range. Journal of Applied Psychology, 107(11), 2040. doi: 10.1037/apl0000994

Sackett, P. R., Zhang, C., Berry, C. M., & Lievens, F. (2023). Revisiting the design of selection systems in light of new findings regarding the validity of widely used predictors. Industrial and Organizational Psychology, 1-18. doi:10.1017/iop.2023.24